CVE-2021-20226: a reference counting bug which leads to local privilege escalation in io_uring

Posted on June 21, 2021 • 23 minutes • 4753 words


Hello, I’m Shiga (@Ga_ryo_), a security engineer at Flatt Security Inc.

In this article, I would like to give a technical description of CVE-2021-20226 (ZDI-21-001), which was published earlier. I discovered this vulnerability and reported it to the vendor via the Zero Day Initiative. This article is not intended to warn you about the dangers of the vulnerability, but to share tips from a technical point of view.

An overview of the vulnerability and the attack method can be found at the links below. This post explains them in a little more detail.

Notes

If you have any questions or find any mistakes, I’d appreciate it if you could contact me directly. The code in this article basically refers to the Linux kernel source code of version 5.6.19.

io_uring is one of the most actively updated features as of 2021, and the information changes from version to version (many changes have been made since I discovered this bug). Therefore, please note that the information may not be up to date even at the time of writing.

General Linux kernel terminology and background knowledge are not explained in this blog.

I will explain the outline of the PoC I wrote, but I will not post the actual code.

Overview

Preconditions

Arbitrary code (command) execution on the system.

Impact

Privilege escalation to root.

What is io_uring

Rough explanation

Roughly speaking, io_uring is the latest asynchronous I/O (network/filesystem) mechanism.

Please refer to some blogs/slides posted on the Internet for specs and detailed descriptions from the user’s perspective.

From here, I will continue to explain the outline of io_uring on the assumption that you understand it.

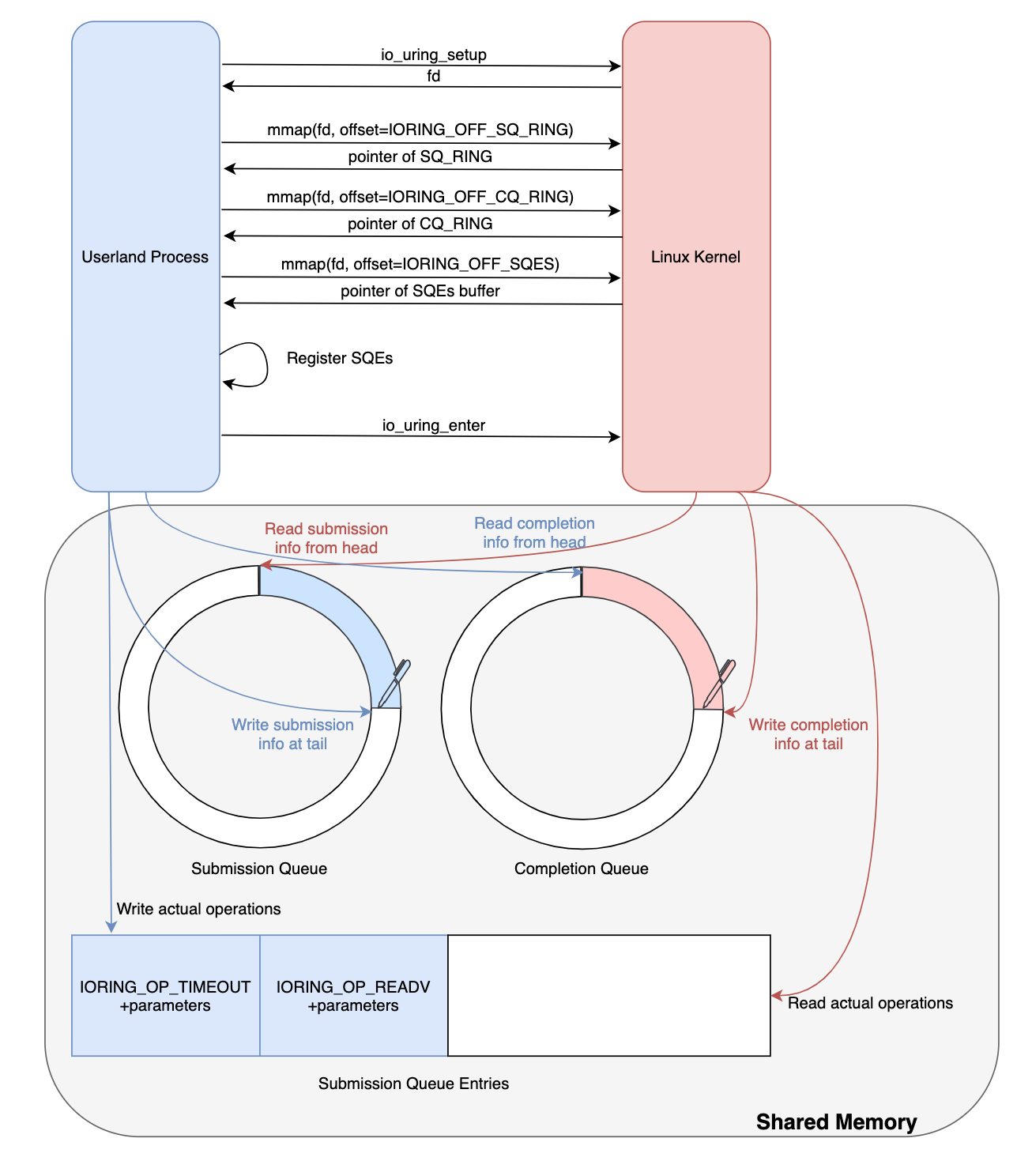

In io_uring, a file descriptor is first created by a dedicated system call (io_uring_setup), and by calling mmap() on it, the Submission Queue (SQ) and Completion Queue (CQ) are mapped into userspace memory and shared with the kernel.

These are used as ring buffers by both sides (kernel and userspace).

Each operation, such as read/write/send/recv, is registered by writing an SQE (Submission Queue Entry) to the shared memory.

Execution is then started by calling io_uring_enter().
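To make the ring-buffer mechanics concrete, here is a minimal sketch of the submission side, modeled entirely in ordinary process memory. It is a simplification under stated assumptions: `model_sq`, `model_sq_push()` and `model_sq_pop()` are hypothetical names, the real ring is located through the offsets in `params.sq_off` (head, tail, ring mask, array), and a real producer needs memory barriers that the model omits. Userspace writes SQE indices into `array` and advances `tail`; the kernel consumes entries starting at `head`.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical model of an SQ ring (not the kernel's layout).
 * RING_ENTRIES must be a power of two so that "& (RING_ENTRIES - 1)" wraps. */
#define RING_ENTRIES 8u

struct model_sq {
    uint32_t head;                 /* advanced by the consumer (the kernel) */
    uint32_t tail;                 /* advanced by the producer (userspace) */
    uint32_t array[RING_ENTRIES];  /* each slot holds an index into the SQE array */
};

/* Producer: publish SQE number sqe_idx. Returns 0, or -1 if the ring is full. */
static int model_sq_push(struct model_sq *sq, uint32_t sqe_idx)
{
    assert(sqe_idx < RING_ENTRIES);           /* must name an existing SQE slot */
    if (sq->tail - sq->head == RING_ENTRIES)  /* unsigned math tolerates wraparound */
        return -1;
    sq->array[sq->tail & (RING_ENTRIES - 1)] = sqe_idx;
    sq->tail++;                               /* a real producer needs a release store */
    return 0;
}

/* Consumer: fetch the next SQE number. Returns 0, or -1 if the ring is empty. */
static int model_sq_pop(struct model_sq *sq, uint32_t *sqe_idx)
{
    if (sq->head == sq->tail)
        return -1;
    *sqe_idx = sq->array[sq->head & (RING_ENTRIES - 1)];
    sq->head++;
    return 0;
}
```

The sample program in the next section performs the producer half of this by hand: it writes indices 0 and 1 into the SQ array and then adds 2 to the tail.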

Asynchronous execution

The part that matters this time is the implementation of asynchronous execution, so I will focus on that.

To state the conclusion first: io_uring operations are not always executed asynchronously; they are executed asynchronously only when needed.

First, please look at the code below. (From here on, kernel v5.8 is used to demonstrate the behavior, so the behavior may differ slightly in your environment.)

```c
#define _GNU_SOURCE
#include <sched.h>
#include <stdio.h>
#include <string.h>
#include <stdlib.h>
#include <signal.h>
#include <time.h>
#include <sys/syscall.h>
#include <sys/fcntl.h>
#include <sys/uio.h>
#include <err.h>
#include <unistd.h>
#include <sys/mman.h>
#include <linux/io_uring.h>

#define SYSCHK(x) ({ \
    typeof(x) __res = (x); \
    if (__res == (typeof(x))-1) \
        err(1, "SYSCHK(" #x ")"); \
    __res; \
})

static int uring_fd;
struct iovec *io;
#define SIZE 32
char _buf[SIZE];

int main(void) {
    // initialize uring
    struct io_uring_params params = { };
    uring_fd = SYSCHK(syscall(__NR_io_uring_setup, /*entries=*/10, &params));
    unsigned char *sq_ring = SYSCHK(mmap(NULL, 0x1000, PROT_READ|PROT_WRITE,
                                         MAP_SHARED, uring_fd,
                                         IORING_OFF_SQ_RING));
    unsigned char *cq_ring = SYSCHK(mmap(NULL, 0x1000, PROT_READ|PROT_WRITE,
                                         MAP_SHARED, uring_fd,
                                         IORING_OFF_CQ_RING));
    struct io_uring_sqe *sqes = SYSCHK(mmap(NULL, 0x1000, PROT_READ|PROT_WRITE,
                                            MAP_SHARED, uring_fd,
                                            IORING_OFF_SQES));
    // iovec for readv(): read up to SIZE bytes into _buf
    io = malloc(sizeof(struct iovec)*1);
    io[0].iov_base = _buf;
    io[0].iov_len = SIZE;
    struct timespec ts = { .tv_sec = 1 }; // SQE 0: a 1-second timeout
    sqes[0] = (struct io_uring_sqe) {
        .opcode = IORING_OP_TIMEOUT,
        //.flags = IOSQE_IO_HARDLINK,
        .len = 1,
        .addr = (unsigned long)&ts
    };
    // SQE 1: readv() from /etc/passwd into _buf
    sqes[1] = (struct io_uring_sqe) {
        .opcode = IORING_OP_READV,
        .addr = (unsigned long)io,   // addr is a __u64, so cast the pointer
        .flags = 0,
        .len = 1,
        .off = 0,
        .fd = SYSCHK(open("/etc/passwd", O_RDONLY))
    };

    // publish SQE indices 0 and 1 in the SQ array, then advance the tail by 2
    ((int*)(sq_ring + params.sq_off.array))[0] = 0;
    ((int*)(sq_ring + params.sq_off.array))[1] = 1;
    (*(int*)(sq_ring + params.sq_off.tail)) += 2;

    int submitted = SYSCHK(syscall(__NR_io_uring_enter, uring_fd,
                                   /*to_submit=*/2, /*min_complete=*/0,
                                   /*flags=*/0, /*sig=*/NULL, /*sigsz=*/0));

    // poll every 0.1 s until readv() has filled _buf
    while(1){
        usleep(100000);
        if(*_buf){
            puts("READV executed.");
            break;
        }
        puts("Waiting.");
    }
}
```

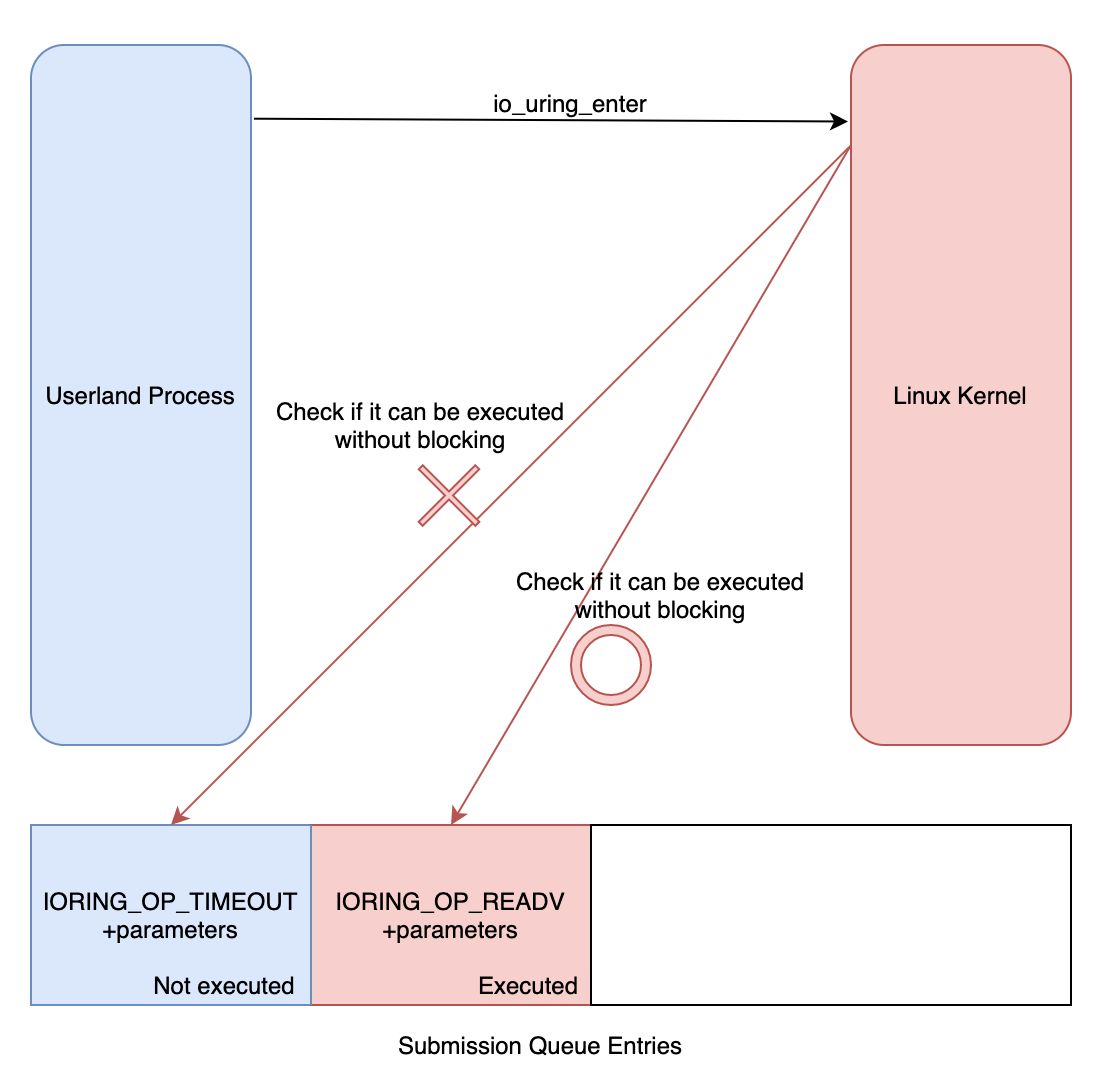

In this code, after setting up the IORING_OP_TIMEOUT and IORING_OP_READV operations, the program starts execution and then checks every 0.1 seconds whether readv() has completed.

Since operations are executed in ring buffer order, you might expect readv() to complete after 1 second. However, when I actually ran it, the result was as follows.

```console
$ ./sample
READV executed.
```

That is, readv() completed immediately.

This is because, as mentioned earlier, operations are executed asynchronously only when needed, and in this case readv() could be completed immediately (it was known that its execution would not block). So the later operation completed first (IORING_OP_TIMEOUT was set aside for the time being).

As a test, let’s use the following SystemTap[¹] script to confirm that readv() is executed synchronously (i.e. inside the system call handler).

[¹]: A tool that lets you flexibly run scripts, for example tracing kernel (and other) functions and printing variables at the traced points. I love this tool because kernel debugging is a hassle.

```
#!/usr/bin/stap
probe kernel.function("io_read@/build/linux-b4NE0x/linux-5.8.0/fs/io_uring.c:2710"){
    printf("%s\n",task_execname(task_current()))
}
```

Below is the output when the previous program (the executable is named sample) is run while the above SystemTap script is running. If the operation were executed asynchronously, you would expect the task to be handed over to some worker; since it is executed synchronously here, the name of the executable that called the system call is printed.

```console
$ sudo stap -g ./sample.stp
sample
```

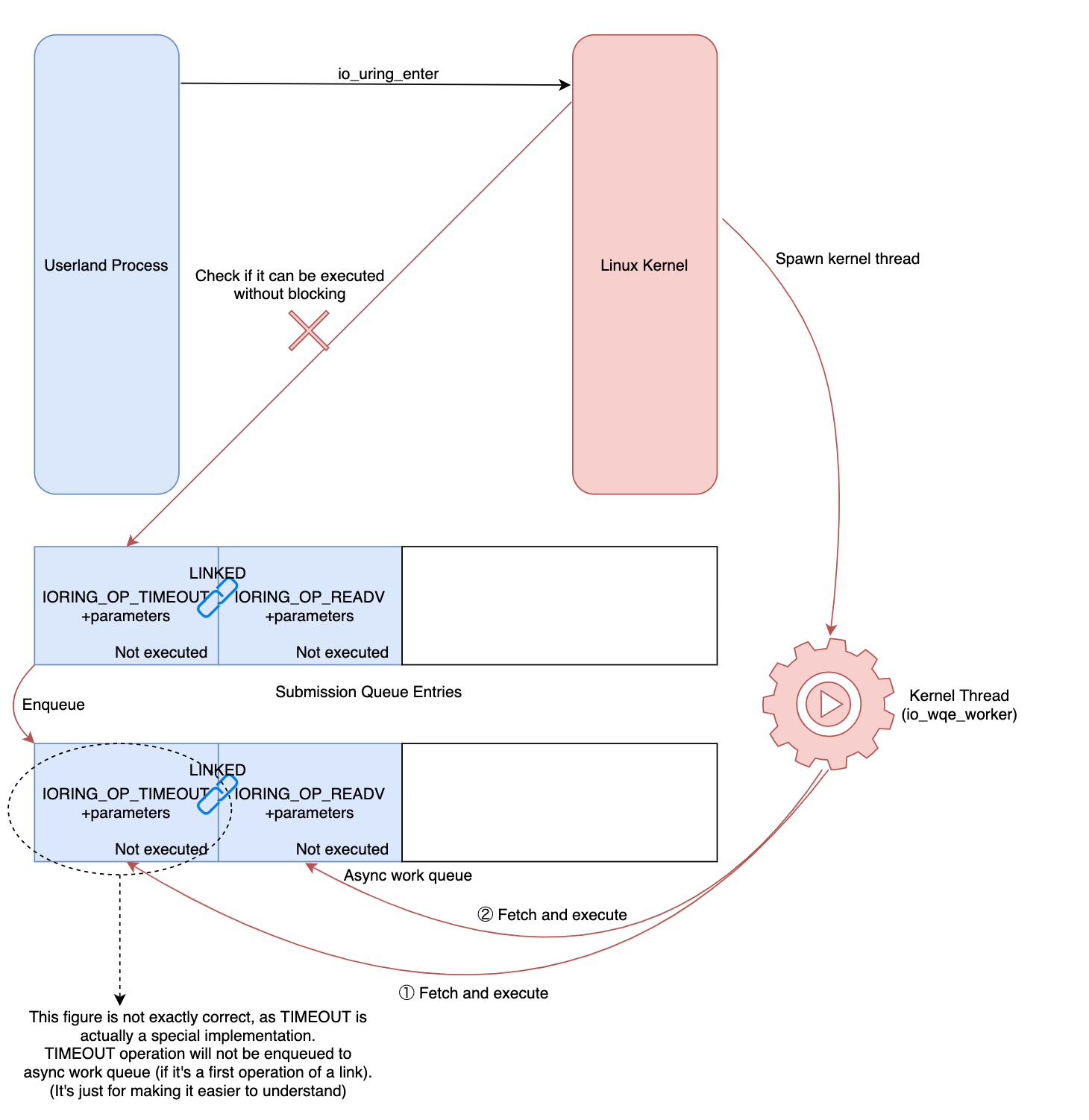

So where did IORING_OP_TIMEOUT go? The answer is that it was “passed to a kernel thread because asynchronous execution was determined to be necessary”. There are several criteria for this, and requests that meet them are enqueued into the queue for asynchronous execution. Here are some examples.

- When the force-async flag (REQ_F_FORCE_ASYNC, set via IOSQE_ASYNC) is enabled

```c
} else if (req->flags & REQ_F_FORCE_ASYNC) {
    ......
    /*
     * Never try inline submit of IOSQE_ASYNC is set, go straight
     * to async execution.
     */
    req->work.flags |= IO_WQ_WORK_CONCURRENT;
    io_queue_async_work(req);
```

https://elixir.bootlin.com/linux/v5.6.19/source/fs/io_uring.c#L4825

- A decision made by the logic specific to each operation (e.g. readv() is called with the IOCB_NOWAIT flag, and EAGAIN is returned if execution would block)

```c
static int io_read(struct io_kiocb *req, struct io_kiocb **nxt,
                   bool force_nonblock)
{
    ......
    ret = rw_verify_area(READ, req->file, &kiocb->ki_pos, iov_count);
    if (!ret) {
        ssize_t ret2;

        if (req->file->f_op->read_iter)
            ret2 = call_read_iter(req->file, kiocb, &iter);
        else
            ret2 = loop_rw_iter(READ, req->file, kiocb, &iter);

        /* Catch -EAGAIN return for forced non-blocking submission */
        if (!force_nonblock || ret2 != -EAGAIN) {
            kiocb_done(kiocb, ret2, nxt, req->in_async);
        } else {
copy_iov:
            ret = io_setup_async_rw(req, io_size, iovec,
                                    inline_vecs, &iter);
            if (ret)
                goto out_free;
            return -EAGAIN;
        }
    }
    ......
}
```

https://elixir.bootlin.com/linux/v5.6.19/source/fs/io_uring.c#L2224

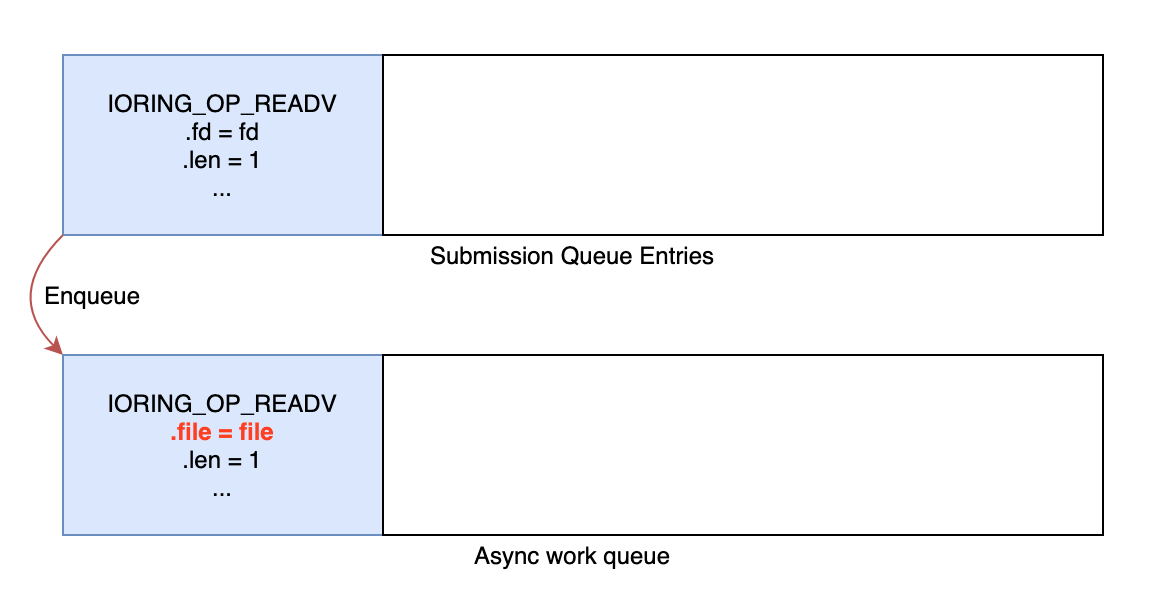

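When EAGAIN is returned, the request is enqueued into the queue for asynchronous execution. (For an operation that uses a file descriptor, the file structure has already been obtained at this point; for an operation that needs the file descriptor table itself, io_grab_files() passes a reference to the table here.)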

```c
static void __io_queue_sqe(struct io_kiocb *req, const struct io_uring_sqe *sqe)
{
    ......
    ret = io_issue_sqe(req, sqe, &nxt, true);

    /*
     * We async punt it if the file wasn't marked NOWAIT, or if the file
     * doesn't support non-blocking read/write attempts
     */
    if (ret == -EAGAIN && (!(req->flags & REQ_F_NOWAIT) ||
        (req->flags & REQ_F_MUST_PUNT))) {
punt:
        if (io_op_defs[req->opcode].file_table) {
            ret = io_grab_files(req);
            if (ret)
                goto err;
        }

        /*
         * Queued up for async execution, worker will release
         * submit reference when the iocb is actually submitted.
         */
        io_queue_async_work(req);
        goto done_req;
    }
    ......
}
```

https://elixir.bootlin.com/linux/v5.6.19/source/fs/io_uring.c#L4741

```c
static int io_issue_sqe(struct io_kiocb *req, const struct io_uring_sqe *sqe,
                        struct io_kiocb **nxt, bool force_nonblock)
{
    struct io_ring_ctx *ctx = req->ctx;
    int ret;

    switch (req->opcode) {
    case IORING_OP_NOP:
        ret = io_nop(req);
        break;
    case IORING_OP_READV:
    case IORING_OP_READ_FIXED:
    case IORING_OP_READ:
        if (sqe) {
            ret = io_read_prep(req, sqe, force_nonblock);
            if (ret < 0)
                break;
        }
        ret = io_read(req, nxt, force_nonblock);
        break;
```

https://elixir.bootlin.com/linux/v5.6.19/source/fs/io_uring.c#L4314

- When the IOSQE_IO_LINK or IOSQE_IO_HARDLINK flag is used (i.e. the execution order is specified) and an operation earlier in the order is determined to require asynchronous execution.

(Requests are connected into a link as in the code below and executed in order; if condition 2 is met partway through, the whole link is enqueued into the asynchronous execution queue.)

```c
static bool io_submit_sqe(struct io_kiocb *req, const struct io_uring_sqe *sqe,
                          struct io_submit_state *state, struct io_kiocb **link)
{
    ......
    /*
     * If we already have a head request, queue this one for async
     * submittal once the head completes. If we don't have a head but
     * IOSQE_IO_LINK is set in the sqe, start a new head. This one will be
     * submitted sync once the chain is complete. If none of those
     * conditions are true (normal request), then just queue it.
     */
    if (*link) {
        ......
        list_add_tail(&req->link_list, &head->link_list);

        /* last request of a link, enqueue the link */
        if (!(sqe_flags & (IOSQE_IO_LINK|IOSQE_IO_HARDLINK))) {
            io_queue_link_head(head);
            *link = NULL;
        }
    } else {
        ......
        if (sqe_flags & (IOSQE_IO_LINK|IOSQE_IO_HARDLINK)) {
            req->flags |= REQ_F_LINK;
            INIT_LIST_HEAD(&req->link_list);

            if (io_alloc_async_ctx(req)) {
                ret = -EAGAIN;
                goto err_req;
            }
            ret = io_req_defer_prep(req, sqe);
            if (ret)
                req->flags |= REQ_F_FAIL_LINK;
            *link = req;
        } else {
            io_queue_sqe(req, sqe);
        }
    }

    return true;
}
```

https://elixir.bootlin.com/linux/v5.6.19/source/fs/io_uring.c#L4858

Strictly speaking, IORING_OP_TIMEOUT is a little special and does not return EAGAIN as described in criterion 2, but I think it is easy to understand, so I use it as the example.
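The three criteria above can be summarized as a small decision function. This is a simplified userspace model, not the kernel’s logic: `model_req`, `model_decide()` and `model_run_link()` are hypothetical names, and the `nowait`/`must_punt` fields only stand in for `REQ_F_NOWAIT`/`REQ_F_MUST_PUNT`. For links, the model assumes that members run inline in order until one has to be punted, after which it and everything after it go to the worker (as noted above, IORING_OP_TIMEOUT actually reaches that point through its timer rather than through EAGAIN).

```c
#include <assert.h>
#include <errno.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical stand-ins for the request flags discussed above. */
struct model_req {
    bool force_async;  /* IOSQE_ASYNC, i.e. REQ_F_FORCE_ASYNC */
    bool nowait;       /* stands in for REQ_F_NOWAIT */
    bool must_punt;    /* stands in for REQ_F_MUST_PUNT */
};

enum model_path { COMPLETE_INLINE, PUNT_TO_WORKER };

/* nonblock_ret: result of the non-blocking attempt (e.g. -EAGAIN). */
static enum model_path model_decide(const struct model_req *req, int nonblock_ret)
{
    if (req->force_async)                       /* criterion 1: never tried inline */
        return PUNT_TO_WORKER;
    if (nonblock_ret == -EAGAIN && (!req->nowait || req->must_punt))
        return PUNT_TO_WORKER;                  /* criterion 2: would block */
    return COMPLETE_INLINE;
}

/* Criterion 3 (simplified): linked requests run inline in order; once one is
 * punted, it and every later member go to the worker. Returns how many
 * members completed inline. */
static size_t model_run_link(const struct model_req *reqs, const int *rets,
                             size_t n, enum model_path *out)
{
    size_t inline_count = 0;
    bool punted = false;

    for (size_t i = 0; i < n; i++) {
        if (!punted && model_decide(&reqs[i], rets[i]) == PUNT_TO_WORKER)
            punted = true;
        out[i] = punted ? PUNT_TO_WORKER : COMPLETE_INLINE;
        if (!punted)
            inline_count++;
    }
    return inline_count;
}
```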

By linking an operation that requires asynchronous execution (IORING_OP_TIMEOUT) with another operation, you can see that the IORING_OP_READV from before is indeed executed only after waiting for 1 second.

Add the IOSQE_IO_HARDLINK flag to the IORING_OP_TIMEOUT operation in the sample code above so that it is linked to the subsequent operation.

```diff
48c48
< //.flags = IOSQE_IO_HARDLINK,
---
> .flags = IOSQE_IO_HARDLINK,
```

Execution result

```console
$ ./sample
Waiting.
Waiting.
Waiting.
Waiting.
Waiting.
Waiting.
Waiting.
Waiting.
Waiting.
READV executed.
```

This time, if you print the name of the process executing io_read() in the same way as before, you get the following output.

```console
$ sudo stap -g ./sample.stp
io_wqe_worker-0
```

As you can see by looking at the process list, this is a Kernel Thread.

```console
$ ps aux | grep -A 2 -m 1 sample
garyo 131388 0.0 0.0 2492 1412 pts/1 S+ 19:03 0:00 ./sample
root 131389 0.0 0.0 0 0 ? S 19:03 0:00 [io_wq_manager]
root 131390 0.0 0.0 0 0 ? S 19:03 0:00 [io_wqe_worker-0]
```

Hereafter, this kernel thread will be referred to as a “worker”. The worker is created by the following code; it then dequeues tasks from the asynchronous execution queue and executes them.

```c
static bool create_io_worker(struct io_wq *wq, struct io_wqe *wqe, int index)
{
    ......
    worker->task = kthread_create_on_node(io_wqe_worker, worker, wqe->node,
                                          "io_wqe_worker-%d/%d", index, wqe->node);
    ......
}
```

https://elixir.bootlin.com/linux/v5.6.19/source/fs/io-wq.c#L621

Aside: As explained earlier, IORING_OP_TIMEOUT behaves slightly differently from the figure below, but it is drawn this way for simplicity. Strictly speaking, when io_timeout() is called, it sets io_timeout_fn() as the timer handler and starts the timer. When the timer expires, io_timeout_fn() is called and enqueues the operations linked to the timeout into the asynchronous execution queue. In other words, IORING_OP_TIMEOUT itself is not enqueued into the asynchronous execution queue. TIMEOUT is used in this explanation because it makes it easy to picture execution being suspended.

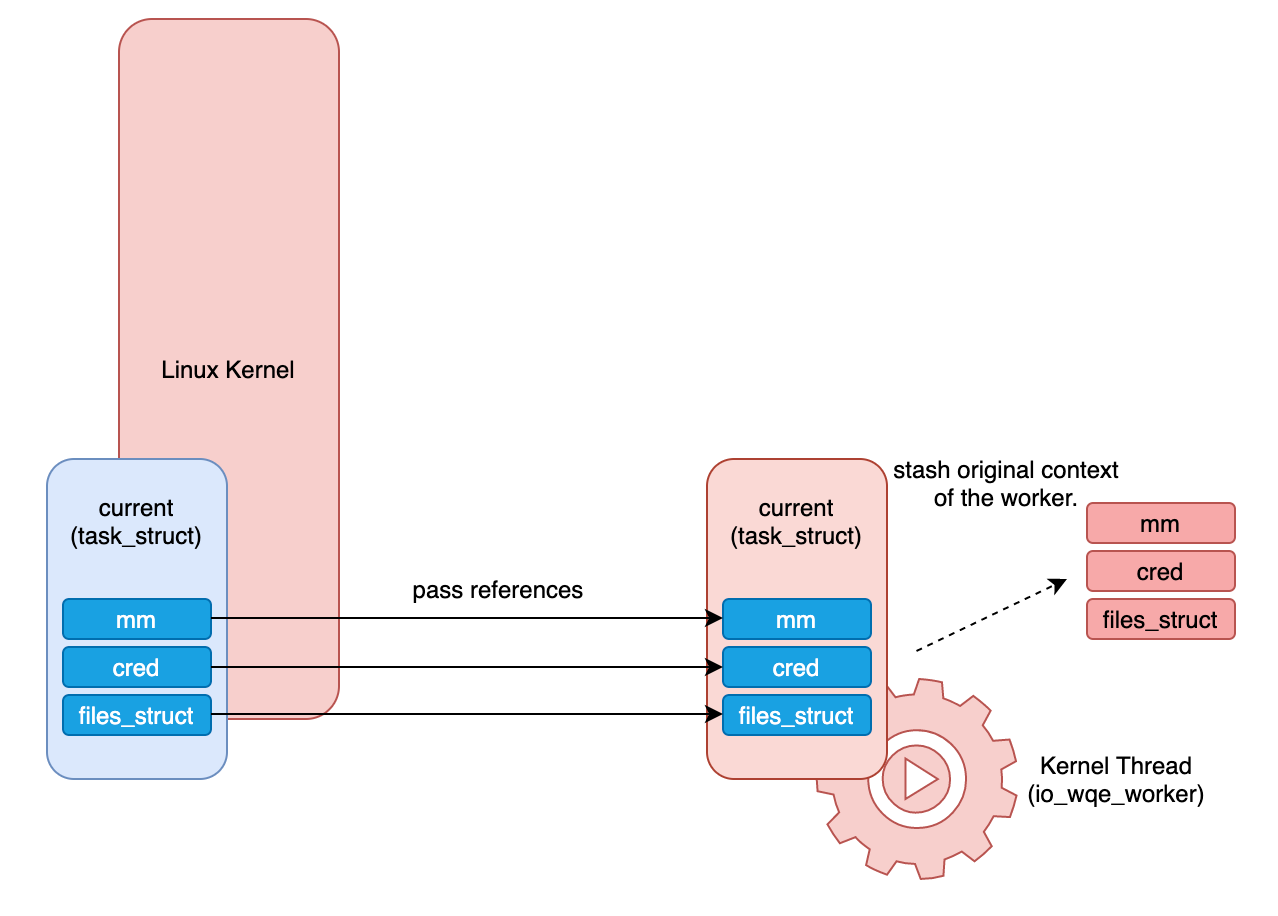

Precautions when offloading I/O operations to the Kernel

We have seen that asynchronous processing is performed by a worker running as a kernel thread. However, there is a caveat here. Since the worker runs as a kernel thread, its execution context differs from that of the thread that calls the io_uring system calls.

Here, “execution context” means the task_struct structure associated with the process and the various information attached to it.

For example: mm (manages the virtual memory space of the process), cred (holds UID/GID/capabilities), files_struct (holds the file descriptor table; it contains an array of pointers to file structures, and a file descriptor is an index into that array), and so on.

If the worker does not use these structures of the thread that called the system call, it may refer to the wrong virtual memory or file descriptor table, or issue I/O operations with kernel-thread privileges (≈ root) [²].

[²]: This was an actual vulnerability: at one point io_uring forgot to switch cred, so operations were executed with root privileges. Although no operation equivalent to open() was implemented at that time, sendmsg() with the SCM_CREDENTIALS option, which conveys the sender’s credentials, could convey root’s credentials. This was a problem for D-Bus, which authenticates peers using those credentials. https://www.exploit-db.com/exploits/47779

Therefore, io_uring passes references to these structures to the worker, and the worker switches its own context to them before execution, thereby sharing the submitter’s execution context. For example, you can see that references to mm and cred are passed to req->work in the following code.

```c
static inline void io_req_work_grab_env(struct io_kiocb *req,
                                        const struct io_op_def *def)
{
    if (!req->work.mm && def->needs_mm) {
        mmgrab(current->mm);
        req->work.mm = current->mm;
    }
    if (!req->work.creds)
        req->work.creds = get_current_cred();
    if (!req->work.fs && def->needs_fs) {
        spin_lock(&current->fs->lock);
        if (!current->fs->in_exec) {
            req->work.fs = current->fs;
            req->work.fs->users++;
        } else {
            req->work.flags |= IO_WQ_WORK_CANCEL;
        }
        spin_unlock(&current->fs->lock);
    }
    if (!req->work.task_pid)
        req->work.task_pid = task_pid_vnr(current);
}
```

https://elixir.bootlin.com/linux/v5.6.19/source/fs/io_uring.c#L910

You can see that the reference to files_struct is passed to req->work in the following code.

```c
static int io_grab_files(struct io_kiocb *req)
{
    ......
    if (fcheck(ctx->ring_fd) == ctx->ring_file) {
        list_add(&req->inflight_entry, &ctx->inflight_list);
        req->flags |= REQ_F_INFLIGHT;
        req->work.files = current->files;
        ret = 0;
    }
    ......
}
```

https://elixir.bootlin.com/linux/v5.6.19/source/fs/io_uring.c#L4634

Then, before execution, the worker installs these into its own current (a macro that returns the task_struct of the currently running thread).

```c
static void io_worker_handle_work(struct io_worker *worker)
    __releases(wqe->lock)
{
    struct io_wq_work *work, *old_work = NULL, *put_work = NULL;
    struct io_wqe *wqe = worker->wqe;
    struct io_wq *wq = wqe->wq;

    do {
        ......
        if (work->files && current->files != work->files) {
            task_lock(current);
            current->files = work->files;
            task_unlock(current);
        }
        if (work->fs && current->fs != work->fs)
            current->fs = work->fs;
        if (work->mm != worker->mm)
            io_wq_switch_mm(worker, work);
        if (worker->cur_creds != work->creds)
            io_wq_switch_creds(worker, work);
        ......
        work->func(&work);
        ......
    } while (1);
}
```

https://elixir.bootlin.com/linux/v5.6.19/source/fs/io-wq.c#L443
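The effect of those checks can be reduced to a few lines. In this hypothetical model (`model_task`, `model_work` and `model_worker_adopt()` are illustrative names, not kernel APIs), a work item carries pointers to the submitter’s file table and credentials, and the worker installs them before running the item, so the operation runs against the submitter’s context rather than the kernel thread’s own. Unlike the kernel code, the model simply skips NULL fields.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-ins; only pointer identity matters in this model. */
struct model_files { int count; };
struct model_cred  { int uid; };

struct model_task {               /* the slice of task_struct the worker swaps */
    struct model_files *files;
    struct model_cred  *cred;
};

struct model_work {               /* the slice of io_wq_work carrying context */
    struct model_files *files;
    struct model_cred  *creds;
};

/* Same shape as the checks in io_worker_handle_work(): before running the
 * work item, adopt the submitter's context wherever it differs. */
static void model_worker_adopt(struct model_task *worker,
                               const struct model_work *work)
{
    if (work->files && worker->files != work->files)
        worker->files = work->files;
    if (work->creds && worker->cred != work->creds)
        worker->cred = work->creds;
}
```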

Vulnerability explanation

Reference counter in files_struct structure when sharing with the worker

Now, let’s move on to the explanation of the vulnerability. In the code below (posted earlier), you can see that a pointer to the files_struct structure of the thread executing the system call is stored in the structure the worker will refer to later, without incrementing its reference counter.

```c
static int io_grab_files(struct io_kiocb *req)
{
    ......
    if (fcheck(ctx->ring_fd) == ctx->ring_file) {
        list_add(&req->inflight_entry, &ctx->inflight_list);
        req->flags |= REQ_F_INFLIGHT;
        req->work.files = current->files;
        ret = 0;
    }
    ......
}
```

https://elixir.bootlin.com/linux/v5.6.19/source/fs/io_uring.c#L4634
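The difference between sharing a pointer and sharing a counted reference is easy to see in a small model. Everything here is hypothetical: `model_grab_files_buggy()` mirrors the assignment in the excerpt above, while `model_grab_files_fixed()` only assumes the general shape of the fix (counting the worker’s use of the `files_struct`) and is not the actual patch. The last helper asks the question that `fdget()` asks, namely whether the table looks private.

```c
#include <assert.h>
#include <stdbool.h>

struct model_files { int count; };          /* hypothetical stand-in for files_struct */
struct model_work  { struct model_files *files; };

/* Mirrors the excerpt: the pointer is shared, the counter is untouched. */
static void model_grab_files_buggy(struct model_work *w, struct model_files *cur)
{
    w->files = cur;
}

/* Assumed shape of the fix: the worker's use is counted as well. */
static void model_grab_files_fixed(struct model_work *w, struct model_files *cur)
{
    cur->count++;
    w->files = cur;
}

/* The question fdget()'s fast path asks: is this table private? */
static bool model_table_looks_private(const struct model_files *f)
{
    return f->count == 1;
}
```

With the buggy variant, a single-threaded submitter’s table is shared with the worker yet still reports `count == 1`, which is exactly the state the rest of this section builds on.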

As briefly explained earlier, by the time a task is enqueued for asynchronous execution, a reference to the file structure has already been obtained from the specified file descriptor and stored in the io_kiocb structure.

```c
static int io_req_set_file(struct io_submit_state *state, struct io_kiocb *req,
                           const struct io_uring_sqe *sqe)
{
    struct io_ring_ctx *ctx = req->ctx;
    unsigned flags;
    int fd;

    flags = READ_ONCE(sqe->flags);
    fd = READ_ONCE(sqe->fd);

    if (!io_req_needs_file(req, fd))
        return 0;

    if (flags & IOSQE_FIXED_FILE) {
        if (unlikely(!ctx->file_data ||
            (unsigned) fd >= ctx->nr_user_files))
            return -EBADF;
        fd = array_index_nospec(fd, ctx->nr_user_files);
        req->file = io_file_from_index(ctx, fd);
        if (!req->file)
            return -EBADF;
        req->flags |= REQ_F_FIXED_FILE;
        percpu_ref_get(&ctx->file_data->refs);
    } else {
        if (req->needs_fixed_file)
            return -EBADF;
        trace_io_uring_file_get(ctx, fd);
        req->file = io_file_get(state, fd);
        if (unlikely(!req->file))
            return -EBADF;
    }

    return 0;
}
```

https://elixir.bootlin.com/linux/v5.6.19/source/fs/io_uring.c#L4599

So the worker does not have to look the file up from the file descriptor again, and does not need to refer to the files_struct structure. If so, it seems harmless that the reference counter of the files_struct structure is not incremented (because the structure is not used).

But this assumption does not hold in Linux kernel 5.5 and later, because system calls that affect the file descriptor table, such as open/close/accept, are now available via io_uring. Since these operations modify the file descriptor table, it looks like something could be used for exploitation. However:

- Even if you simply call open/close/accept etc., nothing happens as long as the files_struct structure is still alive.
  - Of course, system calls already protect against multiple threads handling the same file, so you cannot simply cause a race condition between the calling thread and the worker.
- By freeing the files_struct (bringing its reference counter to 0), a new process may reuse it as its own files_struct. The worker will then refer to the new process’s files_struct.
  - But the file structure has already been obtained from the file descriptor, ~~so it cannot get a reference to the file structure of the new process~~ (this was a lie; I’ll describe it in the “As an aside” part).
  - It’s possible to insert a file structure into the new process’s file descriptor table by opening a file, but the new process will never use it (programs don’t rely on hard-coded file descriptor numbers).

Here, I will explain how the reference counter of the file structure is used to protect against multiple threads handling the same file. Yes, this is a spoiler: the conclusion is that it can actually be abused.

Mechanism of reference counter in open/close system call

To understand how the reference counter in the file structure works, we first need to understand what open/close actually do. Of course, the behavior depends on the actual file being opened, but the following is common to all of them.

open:

- Create a file structure and set the reference counter to 1
- Register it in the file descriptor table

Create a file structure and set the reference counter to 1

```c
static struct file *__alloc_file(int flags, const struct cred *cred)
{
    struct file *f;
    int error;

    f = kmem_cache_zalloc(filp_cachep, GFP_KERNEL);
    ......
    atomic_long_set(&f->f_count, 1);
    ......
    return f;
}
```

https://elixir.bootlin.com/linux/v5.6.19/source/fs/file_table.c#L96

Register it in the file descriptor table (fd_install)

```c
static long do_sys_openat2(int dfd, const char __user *filename,
                           struct open_how *how)
{
    ......
    fd = get_unused_fd_flags(how->flags);
    if (fd >= 0) {
        struct file *f = do_filp_open(dfd, tmp, &op);
        if (IS_ERR(f)) {
            put_unused_fd(fd);
            fd = PTR_ERR(f);
        } else {
            fsnotify_open(f);
            fd_install(fd, f);
        }
    }
    putname(tmp);
    return fd;
}
```

https://elixir.bootlin.com/linux/v5.6.19/source/fs/open.c#L1130

close:

- Delete it from the file descriptor table
- Decrement the reference counter of the file structure (fput)

Delete it from the file descriptor table

```c
int __close_fd(struct files_struct *files, unsigned fd)
{
    struct file *file;
    struct fdtable *fdt;

    spin_lock(&files->file_lock);
    fdt = files_fdtable(files);
    if (fd >= fdt->max_fds)
        goto out_unlock;
    file = fdt->fd[fd];
    if (!file)
        goto out_unlock;
    rcu_assign_pointer(fdt->fd[fd], NULL);
    __put_unused_fd(files, fd);
    spin_unlock(&files->file_lock);
    return filp_close(file, files);

out_unlock:
    spin_unlock(&files->file_lock);
    return -EBADF;
}
```

https://elixir.bootlin.com/linux/v5.6.19/source/fs/file.c#L626

Decrement the reference counter of the file structure (fput)

```c
int filp_close(struct file *filp, fl_owner_t id)
{
    ......
    fput(filp);
    return retval;
}
```

https://elixir.bootlin.com/linux/v5.6.19/source/fs/open.c#L1239

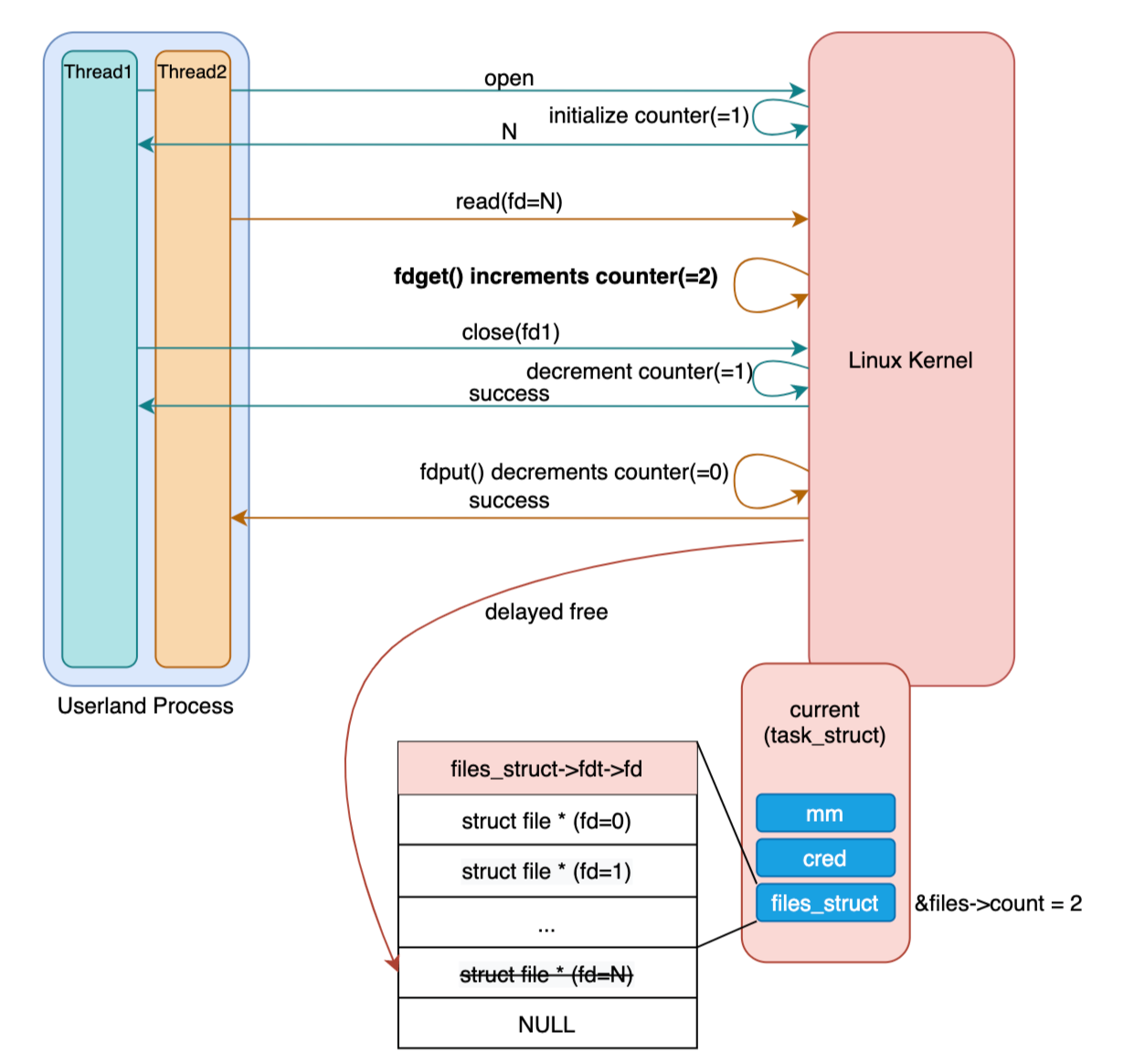

The important functions here are fget()/fput() (although fget() is not used in open). They increment/decrement the reference counter of the file structure, and fput() frees the file structure when the counter reaches 0. Thanks to this mechanism, if code obtains the file structure with fget(), the reference counter will not reach 0 even if the file is closed before fput() is called: the counter is 1 after open, 2 after fget(), and still 1 after close. Therefore, it is safe even if the file is closed while it is in use.

For example, when a file is mapped into memory with mmap, it would be a problem if the file structure were freed after close but before munmap is called. Therefore, mmap uses fget() to prevent it from being freed.

```c
unsigned long ksys_mmap_pgoff(unsigned long addr, unsigned long len,
                              unsigned long prot, unsigned long flags,
                              unsigned long fd, unsigned long pgoff)
{
    struct file *file = NULL;
    unsigned long retval;

    if (!(flags & MAP_ANONYMOUS)) {
        audit_mmap_fd(fd, flags);
        file = fget(fd);
        ......
    }
```

https://elixir.bootlin.com/linux/v5.6.19/source/mm/mmap.c#L1551
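The open, fget, close, fput sequence described above (including the mmap case) can be checked with a small userspace model. The names are hypothetical (`model_file`, `model_fget()`, `model_fput()`, `model_close()`), and one simplification is assumed: the kernel defers the actual release of a `struct file` after the last `fput()`, whereas the model just sets a `freed` flag immediately.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical stand-in for struct file: just the counter and a freed marker. */
struct model_file { long f_count; bool freed; };

static void model_alloc_file(struct model_file *f)  /* like __alloc_file() */
{
    f->f_count = 1;
    f->freed = false;
}

static void model_fget(struct model_file *f)        /* take a reference */
{
    assert(!f->freed);
    f->f_count++;
}

static void model_fput(struct model_file *f)        /* drop a reference */
{
    assert(!f->freed && f->f_count > 0);
    if (--f->f_count == 0)
        f->freed = true;   /* the kernel would (lazily) release the file here */
}

/* Like __close_fd(): clear the table slot first, then drop the table's reference. */
static int model_close(struct model_file **fdt, unsigned int max_fds, unsigned int fd)
{
    if (fd >= max_fds || fdt[fd] == NULL)
        return -1;         /* -EBADF in the kernel */
    struct model_file *file = fdt[fd];
    fdt[fd] = NULL;
    model_fput(file);
    return 0;
}
```

A usage scenario in the spirit of the mmap case: open gives a count of 1, the mapping takes a second reference, close drops back to 1 without freeing, and only the final put (munmap) frees the file.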

fdget(), which doesn’t change the reference counter

There is also a pair of functions, fdget()/fdput(), for getting a reference to a file structure (they are used even more frequently inside system call handlers).

For example, in the read system call, the file structure is used between fdget()(fdget_pos()) and fdput()(fdput_pos()) as shown below.

```c
ssize_t ksys_read(unsigned int fd, char __user *buf, size_t count)
{
    struct fd f = fdget_pos(fd);
    ssize_t ret = -EBADF;

    if (f.file) {
        loff_t pos, *ppos = file_ppos(f.file);
        if (ppos) {
            pos = *ppos;
            ppos = &pos;
        }
        ret = vfs_read(f.file, buf, count, ppos);
        if (ret >= 0 && ppos)
            f.file->f_pos = pos;
        fdput_pos(f);
    }
    return ret;
}

SYSCALL_DEFINE3(read, unsigned int, fd, char __user *, buf, size_t, count)
{
    return ksys_read(fd, buf, count);
}
```

https://elixir.bootlin.com/linux/v5.6.19/source/fs/read_write.c#L576

There seems to be a motivation not to increment or decrement the reference counter of the file structure too often, probably because of cacheline effects. Therefore, under certain conditions fdget() does not increment the reference counter of the file structure. Tracing the function, you can see that fdget() ultimately calls __fget_light(). Let’s take a look at its implementation.

```c
/*
 * Lightweight file lookup - no refcnt increment if fd table isn't shared.
 *
 * You can use this instead of fget if you satisfy all of the following
 * conditions:
 * 1) You must call fput_light before exiting the syscall and returning control
 *    to userspace (i.e. you cannot remember the returned struct file * after
 *    returning to userspace).
 * 2) You must not call filp_close on the returned struct file * in between
 *    calls to fget_light and fput_light.
 * 3) You must not clone the current task in between the calls to fget_light
 *    and fput_light.
 *
 * The fput_needed flag returned by fget_light should be passed to the
 * corresponding fput_light.
 */
static unsigned long __fget_light(unsigned int fd, fmode_t mask)
{
    struct files_struct *files = current->files;
    struct file *file;

    if (atomic_read(&files->count) == 1) {
        file = __fcheck_files(files, fd);
        if (!file || unlikely(file->f_mode & mask))
            return 0;
        return (unsigned long)file;
    } else {
        file = __fget(fd, mask, 1);
        if (!file)
            return 0;
        return FDPUT_FPUT | (unsigned long)file;
    }
}
```

https://elixir.bootlin.com/linux/v5.6.19/source/fs/file.c#L807

As commented, this function can only be used if the conditions are met. It also says, “no refcnt increment if fd table isn’t shared”. What does this mean?

Generally, in multithreaded programs the file descriptor table is shared (files->count >= 2), and the same file descriptor refers to the same file. In this case, for example, another thread can call the close system call while the read system call is being executed. Therefore, fdget() in the read system call must increment the reference counter.

But what if this is an ordinary single-threaded program? In this case, no other system call can run and modify the table while the read system call is being executed. Therefore, nothing bad happens even if the reference counter is not incremented.

For this reason, the reference counter of the file structure is not incremented unless the file descriptor table is shared.
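The fast path of `__fget_light()` can be mimicked as follows. This is a sketch with hypothetical names (`model_fdget()`, `model_fdput()`, `MODEL_FDPUT_FPUT`); it keeps only the decision that matters here, taking a real reference and setting the flag only when the table’s `count` is not 1, and it ignores the `mask` check and RCU.

```c
#include <assert.h>
#include <stddef.h>

#define MODEL_MAX_FDS 4
#define MODEL_FDPUT_FPUT 1u        /* hypothetical; plays the role of FDPUT_FPUT */

struct model_file  { long f_count; };
struct model_files { int count; struct model_file *fd[MODEL_MAX_FDS]; };
struct model_fd    { struct model_file *file; unsigned int flags; };

/* Like __fget_light(): borrow without a reference if the table is not shared. */
static struct model_fd model_fdget(struct model_files *files, unsigned int fd)
{
    struct model_fd r = { NULL, 0 };

    if (fd >= MODEL_MAX_FDS || files->fd[fd] == NULL)
        return r;
    r.file = files->fd[fd];
    if (files->count != 1) {       /* shared table: take a real reference */
        r.file->f_count++;
        r.flags = MODEL_FDPUT_FPUT;
    }
    return r;
}

/* Like fdput(): drop the reference only if model_fdget() took one. */
static void model_fdput(struct model_fd f)
{
    if (f.flags & MODEL_FDPUT_FPUT)
        f.file->f_count--;
}
```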

Combining vulnerabilities with the fdget() spec

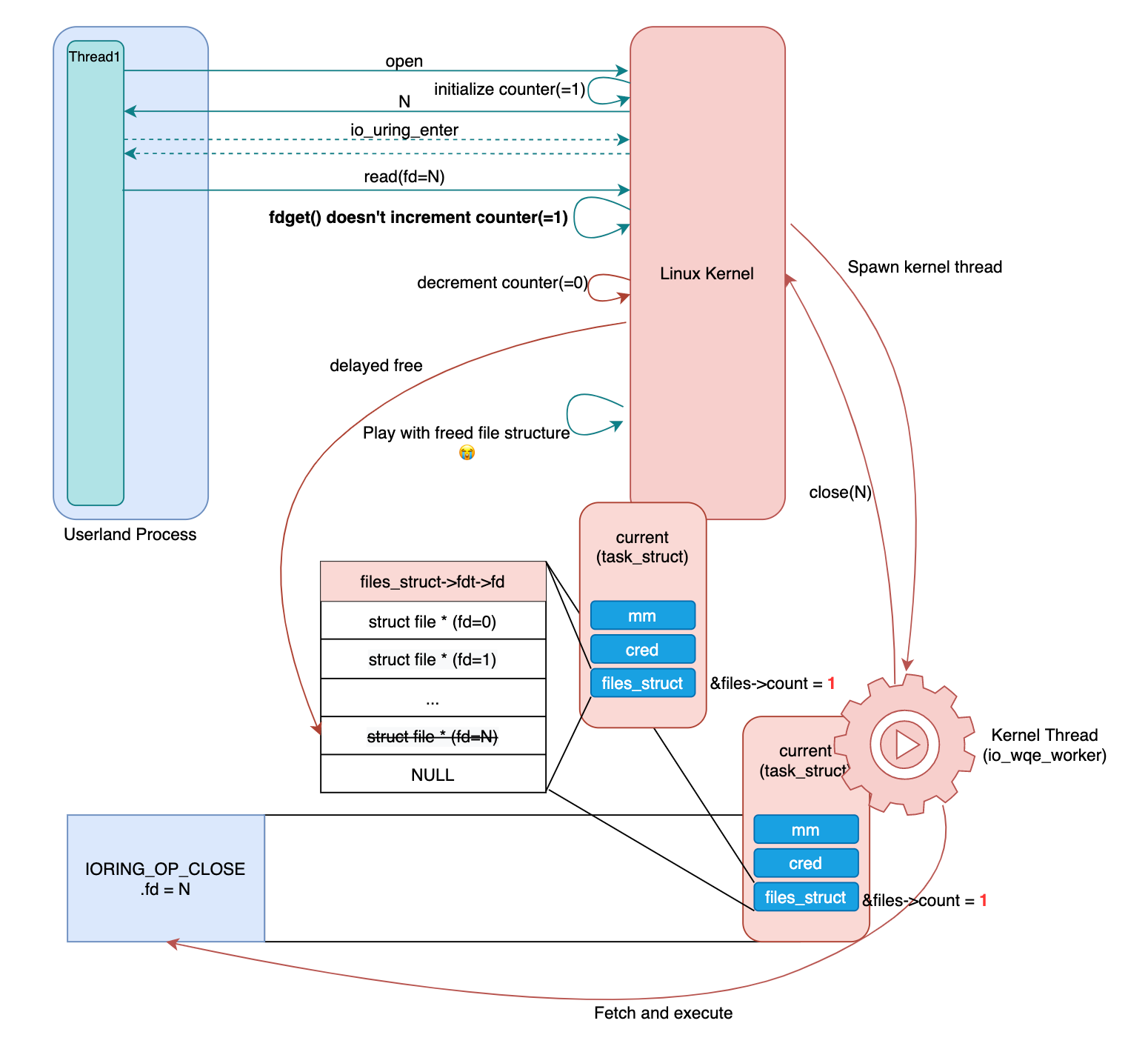

The vulnerability was that a pointer to the files_struct structure was stored in a structure the worker would later use, without incrementing the reference counter. As you may have noticed, this means that if the original program is single-threaded, files->count is 1 even though the file descriptor table is actually shared (with the worker).

files->count being 1 means that **fdget() does not increment the reference counter of the file structure**. But the worker can actually close the file descriptor associated with that file structure, so the file structure obtained by fdget() may be freed while it is still in use.

In summary, this vulnerability is as follows.

- The asynchronous worker shares the files_struct structure with the calling thread, but the reference counter of the files_struct structure is not incremented when it does so.
- Since fdget() does not increment the reference counter of the file structure when the reference counter of the files_struct structure is 1, the file obtained by fdget() may be closed and freed by the worker (or by the calling thread).
- Once the file structure is freed, a UAF occurs wherever it is still being handled (e.g. in file-related system calls).
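Putting the pieces together, the bug can be replayed as one deterministic interleaving in plain userspace C. This is a hypothetical model, not a PoC: no kernel objects are involved, the “worker” is just a function call, and freeing is represented by a flag. With `files_count == 1` (the counter was never bumped for the worker), `fdget()` takes no reference, so the worker’s `close()` drops the only one; with `files_count == 2` (the worker counted, as after the fix), the file survives until `fdput()`.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical stand-ins for struct file and files_struct. */
struct model_file  { long f_count; bool freed; };
struct model_files { int count; struct model_file *fd[4]; };

static void model_fput(struct model_file *f)
{
    if (--f->f_count == 0)
        f->freed = true;            /* stands in for the kernel freeing the file */
}

/* fdget(): takes a reference only if the table looks shared. */
static struct model_file *model_fdget(struct model_files *t, unsigned int fd,
                                      bool *need_put)
{
    struct model_file *f = t->fd[fd];
    *need_put = (t->count != 1);
    if (*need_put)
        f->f_count++;
    return f;
}

/* close() issued by another user of the same table (here: the worker). */
static void model_close(struct model_files *t, unsigned int fd)
{
    struct model_file *f = t->fd[fd];
    t->fd[fd] = NULL;
    model_fput(f);
}

/* One interleaving: the submitter sits between fdget() and fdput() while the
 * worker closes the same fd. Returns true if the file was freed while the
 * submitter was still using it. */
static bool model_interleaving(int files_count)
{
    struct model_file file = { .f_count = 1, .freed = false };
    struct model_files table = { .count = files_count, .fd = { [0] = &file } };
    bool need_put;

    struct model_file *f = model_fdget(&table, 0, &need_put); /* submitter */
    model_close(&table, 0);                                   /* worker */
    bool freed_while_in_use = f->freed;                       /* submitter uses f */
    if (need_put)
        model_fput(f);                                        /* fdput() */
    return freed_while_in_use;
}
```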

Overview of the PoC

The rest is ordinary kernel exploitation, so there is not much to explain.

If there is a code block like the one below, you can call close on the worker side to trigger a use-after-free of the file structure (it is even better if you put a userfaultfd in between).

```c
void func(unsigned int fd)
{
    struct fd f;

    f = fdget(fd); // refcount is not incremented.
    /*
     * Play with f.file :)
     */
    fdput(f);
}
```

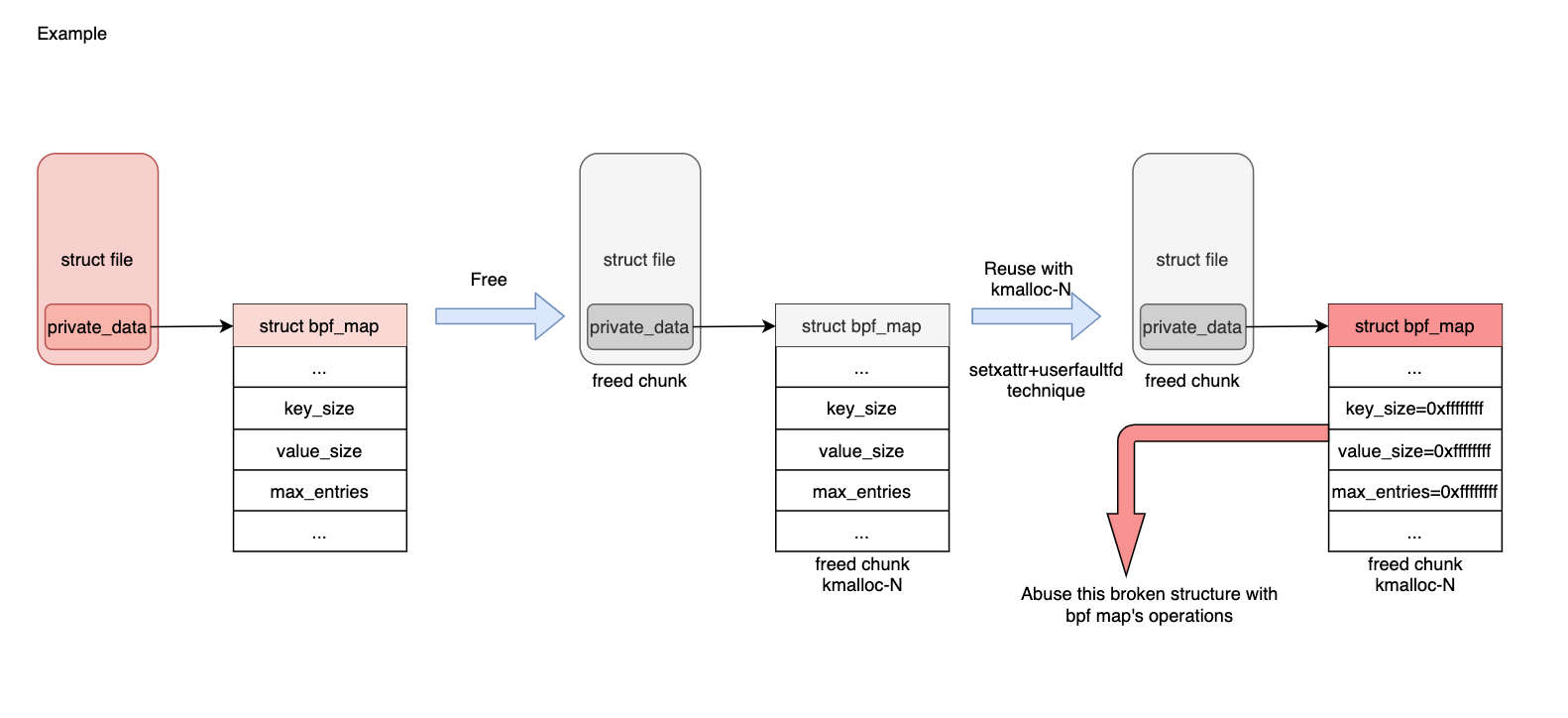

Alternatively, it is possible to exploit the memory region pointed to by the private_data member of the file structure (where each kind of file keeps its own data structure, so many different structures can live there), because it is freed as well. In my exploit, I overwrote the memory of the map structure used by eBPF, which was allocated (overlapped) by calling kmalloc with the same size as the map structure.

Summary

It appears to have been fixed in the following commit, which changes the code to increment the reference counter of the files_struct structure.

https://github.com/torvalds/linux/commit/0f2122045b946241a9e549c2a76cea54fa58a7ff

As an aside

While writing this blog, I noticed something important. After my report, the following issue was raised, and a CVE was assigned to it.

https://bugs.chromium.org/p/project-zero/issues/detail?id=2089

Apparently, another report was filed while the response to mine was delayed because the issue could not be reproduced reliably, and that one seems to have been fixed first.

I did specify the problematic code by file name and line number, but there seems to have been room for improvement in the report content or the PoC.

Also, after reading the report at the above URL, I realized that there is a simpler and more interesting exploitation method, so I would like to introduce it briefly.

Because of the vulnerability, the reference counter of the files_struct structure is not incremented even while the worker is running, so current->files->count of the thread that calls the io_uring system calls stays at 1.

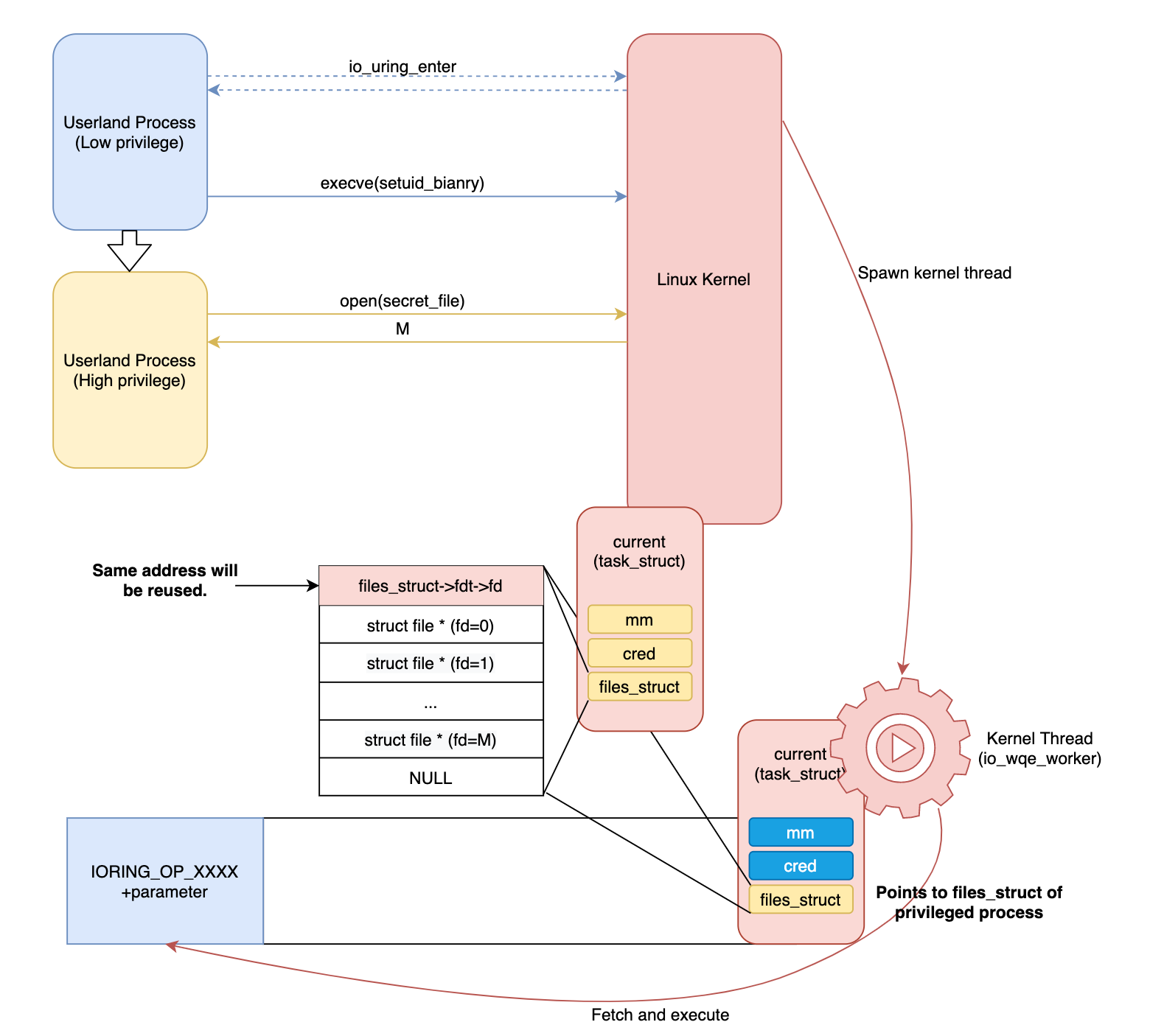

Also, when a new program is loaded with execve, the files_struct structure is reused as-is if current->files->count == 1, as shown in the code below.

load_elf_binary()->begin_new_exec()->unshare_files()->unshare_fd()

```c
static int unshare_fd(unsigned long unshare_flags, struct files_struct **new_fdp)
{
    struct files_struct *fd = current->files;
    int error = 0;

    if ((unshare_flags & CLONE_FILES) &&
        (fd && atomic_read(&fd->count) > 1)) {
        *new_fdp = dup_fd(fd, &error);
        if (!*new_fdp)
            return error;
    }

    return 0;
}
```

https://elixir.bootlin.com/linux/v5.6.19/source/kernel/fork.c#L2883
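The condition in `unshare_fd()` can be modeled in a few lines. In this hypothetical sketch, `model_exec_files()` is not a kernel function: it does not copy any descriptor entries, and for brevity it folds the caller’s drop of the old table into the same step (the kernel does that separately). A visibly shared table is duplicated for the new program, while a table with `count == 1` is kept as-is; because the worker’s use is not counted, the buggy state always takes the second branch.

```c
#include <assert.h>
#include <stdlib.h>

struct model_files { int count; };   /* hypothetical stand-in for files_struct */

/* Duplicate only if count > 1, otherwise keep the same table.
 * Returns NULL on allocation failure. */
static struct model_files *model_exec_files(struct model_files *cur)
{
    if (cur->count <= 1)
        return cur;                          /* reused as-is */

    struct model_files *copy = malloc(sizeof(*copy));
    if (copy == NULL)
        return NULL;
    copy->count = 1;                         /* private table for the new image */
    cur->count--;                            /* the old table keeps its other users */
    return copy;
}
```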

In other words, if execve is called while the worker is running, the worker will always refer to the files_struct structure of the process after the execve.

(Actually, I think it would be easy to get the same address reused even if kmem_cache_free() and kmem_cache_alloc() are called…)

The process after execve does not always have the same privileges as the process before it. For example, if a setuid binary (sudo, su, etc.) is executed, the process becomes privileged after execve. Therefore, by stalling the worker’s execution and then executing sudo or something similar, the worker can refer to the file descriptor table (in files_struct) of the privileged process.

Since the cred structure (process credentials) and the like are taken from the state before execve() (the worker holds its own reference, captured as needed when the task is queued), files cannot be newly opened with the privileges of the privileged process. However, files opened by the privileged process itself can be referenced from the worker side.

```c
static void io_wq_switch_creds(struct io_worker *worker,
                               struct io_wq_work *work)
{
    const struct cred *old_creds = override_creds(work->creds);

    worker->cur_creds = work->creds;
    if (worker->saved_creds)
        put_cred(old_creds); /* creds set by previous switch */
    else
        worker->saved_creds = old_creds;
}
```

https://elixir.bootlin.com/linux/v5.6.19/source/fs/io-wq.c#L431
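The bookkeeping in `io_wq_switch_creds()` can be traced with a small model. The names are hypothetical, and the model assumes that, like the kernel’s `override_creds()`, installing credentials takes a reference on the new ones and returns the previous ones without dropping them. It shows that the worker runs with whatever `work->creds` was captured when the task was queued, that the worker’s own credentials are saved once, and that the reference from each earlier switch is dropped.

```c
#include <assert.h>

struct model_cred { int usage; };           /* hypothetical stand-in for struct cred */

struct model_worker {
    struct model_cred *current_cred;        /* what override_creds() replaces */
    struct model_cred *cur_creds;
    struct model_cred *saved_creds;
};

/* Assumed to behave like override_creds(): take a reference on the new
 * credentials, install them, and return the previous ones unchanged. */
static struct model_cred *model_override_creds(struct model_worker *w,
                                               struct model_cred *c)
{
    struct model_cred *old = w->current_cred;
    c->usage++;
    w->current_cred = c;
    return old;
}

static void model_put_cred(struct model_cred *c) { c->usage--; }

/* Same structure as io_wq_switch_creds(). */
static void model_switch_creds(struct model_worker *w, struct model_cred *work_creds)
{
    struct model_cred *old = model_override_creds(w, work_creds);

    w->cur_creds = work_creds;
    if (w->saved_creds)
        model_put_cred(old);   /* creds installed by the previous switch */
    else
        w->saved_creds = old;  /* first switch: keep the worker's own creds */
}
```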

This means that there’s a possibility of LPE by reading/writing file descriptors opened by privileged processes. (For example, if a shell script that will be executed as root is opened as writable, it can be used for privilege escalation.)

Strictly speaking, as mentioned above, the file structure to read from or write to is obtained from the file descriptor before offloading, so the file structures of the privileged process cannot be used directly. However, io_uring has a feature for registering file descriptors with the io_uring instance, and they can be updated dynamically with the IORING_OP_FILES_UPDATE operation. This operation looks up file descriptors again in the files_struct structure held by the execution context, which means there is room for stealing the privileged process’s file descriptors.

I haven’t confirmed whether there is a convenient executable that can actually be used for exploitation. At least, sudo temporarily opens /etc/shadow with O_RDONLY, so it seems you could read its contents if the timing is right.

Also, depending on the version, the update refers to memory in the privileged process (that is, when updating, you need to specify an address in the privileged process as the address of the file descriptor table). So I thought it would be hindered by ASLR (a setuid binary is required to immediately and reliably reuse the memory of the files_struct structure, but of course su/sudo binaries are built as PIE). (That is the excuse I’ll use to justify this blog. :) )

About us

Flatt Security Inc. provides security assessment services. We welcome inquiries from overseas. If you have any questions, please contact us at https://flatt.tech/en/. Thank you for reading this article.

Reference

https://www.zerodayinitiative.com/advisories/ZDI-21-001/

https://github.com/torvalds/linux/commit/0f2122045b946241a9e549c2a76cea54fa58a7ff

https://bugs.chromium.org/p/project-zero/issues/detail?id=2089